The Ghost in the Machine: Reclaiming Sovereignty in the Age of AI

“The intuitive mind is a sacred gift and the rational mind is a faithful servant. We have created a society that honors the servant and has forgotten the gift.” (attributed to Albert Einstein)

I. The Paradox of the Unspoken

In the rapidly evolving landscape of 2026, we find ourselves confronting a philosophical paradox that has turned into a pressing neurological problem. Sixty years ago, the polymath Michael Polanyi posited a simple yet profound dictum: “We know more than we can tell.” For decades, this concept of tacit knowledge (the skills, intuitions, and know-how that cannot be easily articulated or codified) was viewed as a philosophical curiosity. Today, however, it has become the ultimate moat protecting human expertise from total automation. As artificial intelligence acquires agentic capabilities, mining vast troves of explicit data and executing complex tasks, the university and the broader sphere of knowledge creation face an existential pivot. We are witnessing a transformation in the exchange between AI and human intelligence: the value of explicit information is plummeting toward zero, while tacit, embodied wisdom commands a new premium.

Current debates in neuroscience and AI development point to four possible futures. In the doomsday scenario, humanity is absorbed by AI. In the opt-out scenario, users avoid AI and form a counterculture. In the merger scenario, human and artificial intelligence fuse (‘If we can’t beat them, we merge with them’). The fourth, less spectacular but arguably the most important for knowledge development, is an “escape scenario”: a deliberate strategy that prevents the smooth convenience of AI from eroding our cognitive capacities and producing a phenomenon known as cognitive debt.

This essay explores the necessity of this last scenario. It argues that the future of knowledge creation does not lie in competing with machines on the speed of data processing, but in doubling down on the biological architectures that machines cannot replicate: the messy, risk-laden, and deeply embodied intuitive processes that constitute the Ghost in the Machine.

II. The Biological Architecture of Expertise

To understand why human expertise remains distinct from algorithmic calculation, one must look beyond philosophy to the biological hardware of the human mind. Contemporary neuroscience lends support to Polanyi’s theory through Dual-Process Theory and research on Embodied Cognition, both of which suggest that knowing is not a singular operation. There is a fundamental neuroanatomical divergence between knowing that (declarative memory) and knowing how (procedural memory).

Declarative knowledge (facts, figures, and logic) depends on the hippocampus and surrounding medial temporal lobe, together with the prefrontal cortex and the language centers of the brain. This is the domain of the Explicit: the data that can be written down, digitized, and fed into a Large Language Model (LLM). Procedural knowledge, by contrast, such as the ability to ride a bicycle, the surgeon’s feel for tissue tension, or the chemist’s sense of a reaction’s tipping point, relies heavily on the basal ganglia and the cerebellum. These evolutionarily ancient structures operate largely outside the scope of language. We struggle to put these skills into words because they run on different neural pathways from those used for speech or writing.

One influential account of human decision-making is Antonio Damasio’s Somatic Marker Hypothesis, which posits that what we call gut feelings are physiological responses to patterns our subconscious has already recognized. When an expert perceives a risk or an opportunity, the brain reads the body’s internal signals (a tightening of the stomach, a change in heart rate) to inform the decision before the conscious mind can articulate a reason. On this view, this biological feedback loop is essential for high-stakes decision-making and ethical judgment.

Furthermore, this form of knowledge transfer appears to rely on Neural Coupling. Hyperscanning studies, which record the brain activity of two people at once, indicate that during close teacher-learner interactions the neural activity of the two individuals can synchronize. This synchronization is thought to facilitate the transfer of tacit knowledge through shared presence and experience, a rich biological bandwidth that text-based AI, regardless of its processing speed, cannot replicate.

III. The Tacit-Explicit Gap and the Limits of AI

In 2026, the technological frontier is defined by the attempt to codify the uncodifiable, which exposes what might be called the Tacit-Explicit Gap. We have moved beyond simple chatbots to Agentic AI systems. These agents do not merely answer questions; they build context graphs that map the reasoning behind decisions and use case-based reasoning to mine unstructured data, such as emails, notes, and transcripts, in an attempt to turn tribal knowledge into searchable playbooks. By analyzing thousands of hours of expert behavior in fields such as film editing or surgery, these systems can simulate the appearance of intuition by identifying statistically likely patterns in messy data.

However, despite these advances, a fundamental barrier remains: the lack of an umwelt. In ethology, an umwelt is the world as a particular organism perceives it, its subjective sensory experience. AI has no biological body and, consequently, no subjective sensory world. A quantum AI might calculate a wave function with perfect accuracy, but it has never felt the tension of an experiment or the weight of a cinematic frame.

This limitation extends to the concept of Skin in the Game. Tacit knowledge is often forged in the fires of risk: human intuition is sharpened by the physical, social, or professional consequences of being wrong, and the biological markers of stress and conviction can act as catalysts for intuitive breakthroughs. An AI, having no body to lose and no social standing to risk, cannot experience the stress that sharpens the human mind. It can calculate probabilities, but it cannot hold conviction. This distinction creates the Tacit Edge: while explicit knowledge becomes a commodity that anyone can prompt an AI to generate, the expertise that resides in the unspoken and the embodied becomes the true value of the human contributor.

IV. The Crisis of Cognitive Debt

If the biological distinctiveness of human intelligence is clear, the danger lies in our growing willingness to bypass it. The integration of frictionless AI into education and research has precipitated a crisis of Cognitive Debt. Just as financial debt borrows from future earnings to fund current consumption, cognitive debt borrows from future neural capability to fund current productivity.

Early empirical work, such as a 2025 EEG study from the MIT Media Lab, has provided alarming, if preliminary, evidence of this phenomenon. It reported markedly weaker functional neural connectivity (by up to 55%) in participants who relied on an AI assistant to write essays than in those who worked unaided. These participants also showed a striking failure rate in immediate recall, often unable to quote or explain the very work they had seemingly produced.

This can be described as a hollowing of the mind. When we outsource the struggle of formulating thoughts to an algorithm, we are not just saving time; we are dismantling the neural architecture required for deep thinking. We risk producing a generation of passengers rather than pilots: leaders and creators who suffer from automation bias, blindly trusting the output of algorithms because they lack the internal somatic markers to detect when the machine is wrong.

The danger is further compounded by algorithmic indoctrination. If we internalize explicit knowledge that carries the inherent biases of an AI model, those biases become part of our gut feeling. A medical researcher might intuitively make a prejudiced diagnosis, or a filmmaker might instinctively reject an avant-garde idea, unaware that their intuition has been flattened by a model trained on the average of human output. The result is a loss of originality: of the unique, eccentric deviations that drive true innovation.

V. Strategic Friction: A New Pedagogy for the Mind

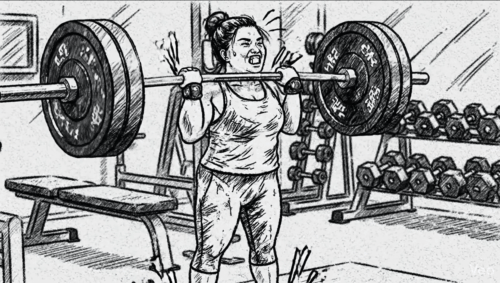

To counter this existential threat, the university and the knowledge economy must pivot from a model of efficiency to a model of Strategic Friction. The goal is no longer to make learning as easy as possible, but to reintroduce desirable difficulty that forces the brain out of its fast, automatic autopilot mode (System 1) and into slow, effortful deliberation (System 2). This approach views the educational environment not as a content delivery system but as a training hall for the mind, designed to build Epistemic Resilience.

For decades, STEM (Science, Technology, Engineering, and Mathematics) served as the “hard” currency of the university precisely because it dealt with the measurable and the predictable. The emergence of AI as a “Logic Engine”, however, has plunged this model into crisis. The problem is that AI is essentially a high-speed “Explicit Knowledge Machine”. Whether calculating a bridge’s stress, sequencing a genome, or writing code, these systems increasingly work faster than their human counterparts and, in many tasks, more accurately.

Consequently, when the “Right Answer” is available for the cost of an API call, the market value of a person who merely knows the right answer drops toward zero. Traditional STEM education, which has long focused on “Declarative Knowledge” (the “What” and the “That”), is effectively being automated out of existence.

In an economy increasingly governed by probability, the “Improbable” (the domain of the Humanities) becomes the highest-value asset. LLMs are trained to predict the most likely next token, which makes their output tend toward the average, the safe, and the logical. The Humanities, Arts, and Social Sciences (HAS), in contrast, are the domain of the “Outlier”. Disciplines such as literature, theater, and philosophy study the exception to the rule: the irrational motive, the existential crisis, the revolutionary spark. The Humanities therefore serve a critical function: they cultivate precisely the deviations that probability-based machines smooth away.

A new pedagogy that integrates these qualities of HAS rests on Cognitive Forcing Functions: protocols designed to break our reliance on the path of least resistance.

- The Wait and Compare Protocol: The first line of defense is to preserve the gap between human impulse and machine output. In this protocol, students or researchers may not consult an AI immediately. They must first formulate a hypothesis, draft a strategy, or sketch a design using only their natural intelligence. This method trains authorship. It teaches individuals that their unique value lies not in the correct answer but in the irrational or counterintuitive ideas that a probability-based machine would filter out. Only after the human strategy is defined may the tool be engaged.

- The Blind Spot Bridge: To operate effectively in a world of complex systems, we must bridge the divide between the calculating mind (STEM) and the narrative mind (the Humanities, Arts, and Social Sciences, or HAS). The Blind Spot Bridge is a transdisciplinary mechanism that puts this integration into practice.

The process begins with the STEM Anchor: the student must visualize a complex problem, such as an economic shift or a supply-chain failure, as a Causal Loop Diagram. This must be done in 60 seconds and without notes, forcing the brain to rely on systems intuition rather than rote memorization.

This is immediately followed by the HAS Intervention. The student must adopt an adversarial persona: a character or viewpoint that directly contradicts their initial logical conclusion. This forces intellectual sympathy and internal “red-teaming”, requiring the student to inhabit another’s lived experience to grasp truths that logic misses.

Only then does synthesis occur. The student prompts the AI and compares their internal, intuitive map against the machine’s rational list. The learning happens in the resilience gap: Where did the AI miss a nuance the human felt? Where did the human miss a fact the AI provided? This protocol prevents the siloing of skills and ensures that the human remains the architect of insight rather than a mere consumer of information.

- The Intuition Veto: In a future governed by probability, the most critical leadership skill is the ability to say no to a calculation that is mathematically correct but ethically wrong. The Intuition Veto trains students to conduct a Human-Values Audit (HVA). If a graduate feels a somatic marker (a gut feeling of wrongness) about an AI output, they are trained to exercise this irrationalist veto. It prioritizes moral authorship over efficiency, acknowledging that protecting dignity and care often requires overriding machine logic.

VI. Designing Spaces for Resistance

The philosophy of strategic friction must be encoded into the very physical walls of the university. The campus of the future is not a smart city of seamless connectivity; it is a cognitive training hall defined by resistance.

To combat the constant predictive nudges of digital devices, we must construct Analog Vaults. These are data-free zones, physically shielded by Faraday cages to block all Wi-Fi and cellular signals. These sanctuaries force deep duration, the capacity to focus on a single complex problem for hours without interruption. In these spaces, the rule is analog tools only: whiteboards, clay, paper, but no screens.

Furthermore, the architecture itself should be counterintuitive. Borrowing from the Shared Space concept in urban planning, the removal of excessive signage and rational instructions forces individuals to rely on social intuition and awareness to navigate. In high-stakes simulation rooms, touchscreens should be replaced with heavy, tactile levers and physical switches. The physical effort required to engage these mechanisms breaks the cognitive tunneling associated with smooth glass screens, reconnecting the motor cortex with the reasoning mind.

The verification of knowledge must also shift from the private to the public. The oral defense replaces the written essay as the primary mode of assessment: students must stand and defend their reasoning in real time against human critique. This helps ensure that knowledge is genuinely internalized and hallucination-proof, since one cannot outsource an oral defense to a chatbot.

VII. Internalizing the Machine

The ultimate goal of this friction-based approach is to expand and enrich tacit knowledge. In the current era, the world is obsessed with externalization, mining human expertise to train AI. The sovereign individual of the future, however, will be focused on internalization, taking the cold, explicit outputs of AI and absorbing them back into biological intuition.

This process moves knowledge through the stages of competence, from the explicit phase (reading an AI report) through an internalization bridge (strategic friction) to the implicit phase, where knowledge becomes second nature, stored in the basal ganglia.

The arts act as internalization conductors that solve the “bandwidth problem”, moving knowledge from low-bandwidth screens directly into the person. In the life sciences, for instance, students do not just study a protein fold but choreograph it, using the body to represent molecular forces and moving data into the sensorimotor cortex. Similarly, in data science, students use sonic anchors to listen to datasets, turning variables such as heart-rate variability into rhythmic loops in order to internalize the data.

The humanities supply the essential ends to the means provided by STEM. In an era of “Black Box” algorithms, they provide the tools for a Human-Values Audit, training students to exercise an intuition veto when they feel a somatic sense of wrongness about a mathematically perfect AI output.

VIII. The Pattern Breaker and the Future of Leadership

By embracing Strategic Friction, the university produces a distinct type of leader: the Pattern Breaker. Artificial intelligence, as it is built today, is a pattern-repeater: it predicts the most likely next token based on historical data. The human expert, shaped in the training hall of the mind, is a pattern-breaker, capable of using intuition to identify the anomaly, the vital error, or the unique deviation that leads to innovation.

This Pattern Breaker, or augmented polymath, possesses hyper-pattern recognition and cognitive independence. Their knowledge is offline, permanent, and resilient against power outages or subscription changes. They have internalized the complexity of the machine age without surrendering their biological agency.

IX. Conclusion: The Sovereignty of the Mind

The transformation of the exchange between AI and human intelligence demands a radical rethinking of how we value and create knowledge. We are moving from an era in which the accumulation of explicit facts was power to an era in which the cultivation of implicit, tacit wisdom is liberty.

If we continue to view AI as a prosthetic that replaces thought, we face Nietzsche’s Last Man scenario: a world of passive observers watching an automated reality. If, however, we view AI as a training weight, a source of resistance against which we sharpen our biological faculties, we can reclaim our status as creators.

The Forum Internum (internal tribunal), the inner sanctuary of the mind, must be protected not just by laws but by practice. By prioritizing the unspoken, enforcing Strategic Friction, and designing our physical and intellectual environments to demand deep duration, we ensure that the smartest machine in the room is not the computer but the human being who commands it. We know more than we can tell, and the mission of the future university is to ensure we never forget how to know it.